Network Slicing

In network slicing, the customer and the network operator sign a service level agreement. This agreement specifies the QoS delivered to the network slice of the customer and the price that the customer needs to pay in exchange.

Here, splitting the resources fairly among the slices means little to the customers if their share cannot deliver the promised QoS. As a result, resource sharing mechanisms based on utility maximization are not enough; resource provisioning mechanisms are also needed.

To efficiently meet the QoS requirements of all slices, we propose the following architecture that enables dynamic resource adaptation:

Bandwidth Demand Estimator: monitors the traffic of the slice online and outputs the amount of resources it requires.

Network Slice Multiplexer: decides online which demands to accept when the provisioned resources are not enough for all of them.
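
The interplay of the two functions can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation from the papers: the sliding-window peak estimator, its `window` and `headroom` parameters, and the smallest-demand-first acceptance policy are all hypothetical choices made here to keep the example short.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class BandwidthDemandEstimator:
    """Hypothetical estimator: demand = peak of recent traffic times a headroom factor."""
    window: int = 5         # number of recent traffic samples kept (assumed)
    headroom: float = 1.2   # safety margin over the observed peak (assumed)
    samples: deque = field(default_factory=deque)

    def observe(self, traffic: float) -> float:
        """Record one traffic sample and return the current bandwidth demand."""
        self.samples.append(traffic)
        if len(self.samples) > self.window:
            self.samples.popleft()
        return self.headroom * max(self.samples)


def multiplex(demands: Dict[str, float], capacity: float) -> Dict[str, bool]:
    """Hypothetical policy: accept demands smallest-first while capacity remains."""
    accepted = {}
    remaining = capacity
    for slice_id, demand in sorted(demands.items(), key=lambda kv: kv[1]):
        fits = demand <= remaining
        accepted[slice_id] = fits
        if fits:
            remaining -= demand
    return accepted
```

Each slice would own one estimator, and the multiplexer would be called once per decision interval with the latest demand of every slice. Any other acceptance rule (for example, one driven by priorities in the service level agreement) could replace the smallest-first ordering.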

This architecture needs to be deployed at the various nodes that compose the network slice. At the base station of a cellular network, the bandwidth demand corresponds to the physical resource blocks (PRBs) needed by the MAC scheduler of the slice to meet the desired QoS. At a switch, the bandwidth demand may correspond to the weight used by the packet-by-packet generalized processor sharing (PGPS) algorithm running at its output ports. In addition, the Network Slice Multiplexer should be provisioned with enough resources to accept all the bandwidth demands for a high fraction of the time. In the following papers, we propose implementations for each network function.
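
One simple way to read the provisioning requirement is as an empirical quantile: pick the smallest capacity that would have covered the aggregate demand in the desired fraction of past intervals. The sketch below assumes a recorded demand history is available; the function name `provision_capacity` and the parameter `target_fraction` are hypothetical, and the papers may provision resources differently.

```python
def provision_capacity(demand_history, target_fraction=0.95):
    """Return the smallest observed aggregate demand that covers at least
    `target_fraction` of the recorded intervals.

    demand_history: list of intervals, each a list of per-slice demands.
    """
    if not demand_history:
        raise ValueError("demand_history must not be empty")
    if not 0.0 < target_fraction <= 1.0:
        raise ValueError("target_fraction must be in (0, 1]")

    # Aggregate demand per interval, in increasing order.
    totals = sorted(sum(interval) for interval in demand_history)
    n = len(totals)
    for covered, capacity in enumerate(totals, start=1):
        # `covered` intervals have aggregate demand <= capacity.
        if covered / n >= target_fraction:
            return capacity
    return totals[-1]
```

Lowering `target_fraction` reduces the provisioned capacity but increases how often the multiplexer has to reject a demand, which is the efficiency versus isolation tension discussed further down.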

Data-driven Bandwidth Adaptation for Radio Access Network Slices

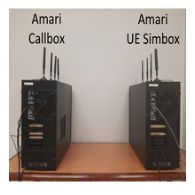

We develop a Bandwidth Demand Estimator that adapts the physical resource blocks allocated to each slice at a base station based on its traffic and packet delay requirements. Note that allocating only as many resources as are just enough to meet the desired QoS may build up large packet queues and hinder the allocation process later on. For this reason, we propose a Reinforcement Learning approach that utilizes QoS feedback. We implement the proposed algorithm on a 3GPP-compliant testbed by Amarisoft. The approach significantly reduces the average allocated bandwidth and improves QoS delivery, even for tail delay requirements.
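
To make the idea of learning from QoS feedback concrete, here is a deliberately simplified sketch: a stateless epsilon-greedy bandit that picks a PRB allocation per interval and is rewarded for using little bandwidth without violating the QoS target. It is not the algorithm from the paper. The PRB levels, the reward shape, the `violation_penalty` value, and the `toy_qos_violated` feedback function are all assumptions made for illustration.

```python
import random

PRB_LEVELS = [10, 20, 30, 40, 50]  # candidate allocations (assumed values)


class PrbBandit:
    """Epsilon-greedy bandit over PRB allocations, learning from QoS feedback."""

    def __init__(self, levels, epsilon=0.1, violation_penalty=2.0, seed=0):
        self.levels = list(levels)
        self.epsilon = epsilon
        self.violation_penalty = violation_penalty
        self.rng = random.Random(seed)
        self.q = [0.0] * len(self.levels)     # value estimate per allocation
        self.counts = [0] * len(self.levels)  # times each allocation was tried

    def choose(self):
        """Return the index of the allocation to apply in the next interval."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.levels))
        return max(range(len(self.levels)), key=lambda i: self.q[i])

    def update(self, action, qos_violated):
        """Reward penalizes both bandwidth use and QoS violations."""
        cost = self.levels[action] / max(self.levels)
        reward = -cost - (self.violation_penalty if qos_violated else 0.0)
        self.counts[action] += 1
        # Incremental sample average of the rewards seen for this action.
        self.q[action] += (reward - self.q[action]) / self.counts[action]


def toy_qos_violated(prbs, required_prbs=30):
    """Stand-in for testbed feedback: the delay target is missed if under-allocated."""
    return prbs < required_prbs


agent = PrbBandit(PRB_LEVELS)
for _ in range(200):
    a = agent.choose()
    agent.update(a, toy_qos_violated(agent.levels[a]))
```

Because the bandit keeps no state, it cannot capture the queue build-up effect noted above; a fuller treatment would include queue or delay measurements in the state so that the consequences of a tight allocation on later intervals are learned as well.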

Resource Efficiency vs Performance Isolation Tradeoff in Network Slicing

Robust Resource Sharing via Hypothesis Testing

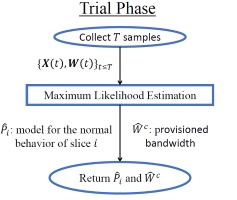

We introduce hypothesis testing in resource sharing to handle the tradeoff between efficiency and isolation without exclusive resource reservation. Our approach comprises two phases. In the trial phase, the operator obtains a stochastic model for each slice that describes its normal behavior, provisions resources and signs the service level agreements. In the regular phase, whenever resource contention occurs, hypothesis testing is conducted to check which slices follow their normal behavior. Slices that fail the test are excluded from resource sharing to protect the well-behaved ones. Results show that our approach fortifies the service level agreements against unexpected traffic patterns with reduced resources.
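
The two phases can be illustrated with a small sketch. It assumes, purely for illustration, that the stochastic model of a slice is a Gaussian fitted to its trial-phase demand and that the test is a one-sided z-test on the mean of recent demand; the paper may use a different model and test. The function names and the significance level `alpha` are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def fit_model(trial_samples):
    """Trial phase: summarize a slice's normal behavior as (mean, std)."""
    return mean(trial_samples), stdev(trial_samples)


def follows_normal_behavior(model, recent, alpha=0.01):
    """One-sided z-test: is the recent mean demand consistent with the model?"""
    mu, sigma = model
    z = (mean(recent) - mu) / (sigma / math.sqrt(len(recent)))
    p_value = 1.0 - NormalDist().cdf(z)
    return p_value >= alpha


def slices_in_sharing(models, recent_demand, capacity, alpha=0.01):
    """Regular phase: test slices only when their demands exceed the capacity."""
    total = sum(samples[-1] for samples in recent_demand.values())
    if total <= capacity:
        return set(recent_demand)  # no contention, nobody is excluded
    return {
        slice_id
        for slice_id, samples in recent_demand.items()
        if follows_normal_behavior(models[slice_id], samples, alpha)
    }
```

With two slices that share the same trial-phase model, a slice whose recent demand roughly doubles fails the test during contention and is left out of the sharing set, while the slice that stays near its trial-phase mean keeps its share.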

End-to-End Resource Adaptation in Mobile Edge Computing (work in progress)

We develop a resource adaptation algorithm for latency-sensitive and bitrate-sensitive slices. The algorithm adapts the downlink and uplink bandwidth of each slice based on its current traffic. The latency-sensitive slice is composed of OpenRTiST users and the bitrate-sensitive slice of file transfer users. The OpenRTiST users create closed-loop traffic that passes through the edge for processing. The file transfer users create unidirectional traffic. The algorithm is implemented on OpenAirInterface 5G, and the experimental setup involves software-defined radios and commercial off-the-shelf mobile devices.
